Article #10 of AI in Education Article Series: June 2025.

Consider Scarlatti's practical guidance for implementing AI agents in educational settings, based on real-world pilots in vocational training.

Written by

Superpower: Romance languages

Fixations: Sunday drives

Phoebe works predominantly in social and market research, as well as monitoring and evaluation. Her projects often involve large-scale surveying and interviewing, and more recently, Artificial Intelligence in education.

She began her journey into research and evaluation in Brazil in 2020, supporting projects on social services, gender violence and education for NGOs, governments and intergovernmental agencies. Prior to this, she worked as an English language teacher for adults.

Outside of work, Phoebe loves history, languages, animals and the outdoors. Together with her partner, she offers support services for Latin American migrants in New Zealand.

Phoebe has a Conjoint Bachelor of Arts and Commerce in Marketing (Market Research), International Business and Spanish.

In early 2025, Scarlatti developed and piloted an Artificial Intelligence (AI) agent for oral assessment. We hypothesised that this technology could improve outcomes for students who have learning difficulties, are neurodiverse, or speak English as a second language. As of June 2025, the pilots are complete and an evaluation is underway. This article shares five practical lessons that education providers can apply when piloting a similar agent of their own.

This article is the tenth in a series titled “AI in Education”, aimed at education providers interested in AI. The intention is for this series to act as a beginner’s guide to the use of AI in education, with a particular focus on AI agents. The series has been produced as part of a project to develop an AI agent for learner oral assessment, funded by the Food and Fibre Centre of Vocational Excellence. We invite you to get in touch to see a demo or explore how you could use AI agents in your own context.

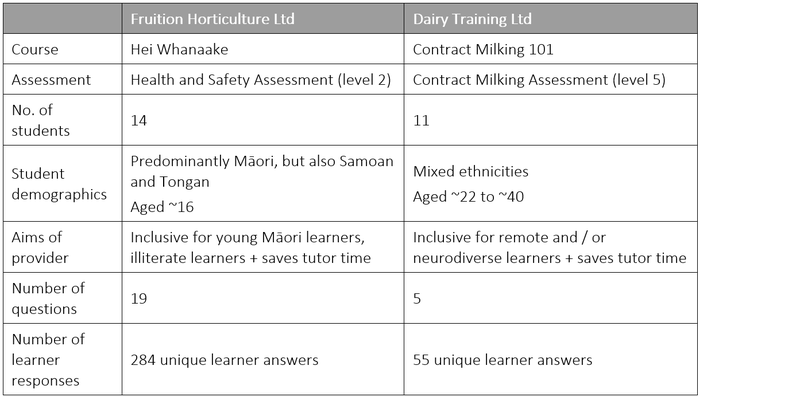

From March to May 2025, Scarlatti undertook two pilots of our AI agent for oral assessment – one with Fruition Horticulture Limited, and one with Dairy Training Limited. These are summarised below:

Below, we share five key learnings from this project.

The first lesson is the importance of assessing all aspects of feasibility before starting. In our pilots, two important factors were whether moderation requirements would allow the AI agent to ask follow-up questions and to provide positive reinforcement and feedback, and whether the agent could be easily integrated into the education provider's learner management system (LMS).

In your scoping phase, it is also important to bring the relevant staff on board. We found that teaching staff and those with expertise in Quality Assurance were valuable in ensuring the AI agent met both real needs and moderation requirements. Teaching staff whose questions had been well addressed were more comfortable piloting the agent with their learners.

Our design phase highlighted how easy it is to ‘reformat’ your existing assessment questions to be oral. However, this does not unlock the real capabilities of AI, which could enable styles of assessment that were previously economically infeasible, or inconceivable. For example, an AI agent could conduct the assessment as a roleplay in which it plays an employer, colleague, customer or client; it could conduct a debate with the learner; or it could ask the learner to swap between languages.

During development, we found that two balances had to be struck.

Accuracy vs flexibility: a ‘highly accurate’ agent can give a fail grade to a learner who misses a certain word despite otherwise understanding the material, whereas a ‘highly flexible’ agent may interpret a learner’s answer so openly that it is overly generous with grades.

Follow-up ability vs answer security: an AI agent that has complete access to assessment answers would consistently ask follow-up questions in response to incorrect or vague answers, but may ‘give away’ the answer in that follow-up. In contrast, a secure agent may have no access to answers, making it incapable of asking follow-ups.
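As a rough illustration of these two balances, the Python sketch below reduces each one to a single setting. It is not the agent used in the pilots: simple phrase matching stands in for the language model, and every name in it (`RubricItem`, `pass_fraction`, `reveal_concept` and so on) is hypothetical. The generic follow-up is one possible middle ground we assume for illustration, where the agent uses the answers to decide whether to probe but does not quote them.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class RubricItem:
    """One marking point: a concept plus phrasings a learner might use (hypothetical)."""
    concept: str
    accepted_phrases: Tuple[str, ...]


def covered_concepts(answer: str, rubric: List[RubricItem]) -> Set[str]:
    """Return the rubric concepts mentioned in the transcribed answer.

    Substring matching is a crude stand-in for an AI model's judgement.
    """
    text = answer.lower()
    return {
        item.concept
        for item in rubric
        if any(phrase in text for phrase in item.accepted_phrases)
    }


def grade(answer: str, rubric: List[RubricItem], pass_fraction: float) -> bool:
    """Pass when the share of covered concepts reaches pass_fraction.

    pass_fraction=1.0 behaves like a 'highly accurate' agent (one missed
    point fails); lower values behave like a more 'flexible' agent.
    """
    if not rubric:
        raise ValueError("rubric must contain at least one item")
    return len(covered_concepts(answer, rubric)) / len(rubric) >= pass_fraction


def follow_up_prompt(
    answer: str, rubric: List[RubricItem], reveal_concept: bool
) -> Optional[str]:
    """Return a follow-up question, or None if nothing is missing.

    reveal_concept=True names the missing point, which risks giving the
    answer away; False asks a generic question that leaks nothing.
    """
    covered = covered_concepts(answer, rubric)
    missing = [item.concept for item in rubric if item.concept not in covered]
    if not missing:
        return None
    if reveal_concept:
        return f"Can you tell me about {missing[0]}?"
    return "Is there anything else you would add?"
```

With a three-point rubric and an answer that covers two points, `grade(..., pass_fraction=1.0)` fails the learner while `grade(..., pass_fraction=0.6)` passes them. Where to set such a threshold, and how much a follow-up may reveal, are decisions to settle with teaching and Quality Assurance staff.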

The pilot phase revealed the value of testing the agent in low-stakes environments. This matters because things can still go wrong in a pilot, and students will be more anxious if the assessment counts towards their final grade or results.

This series is coming to an end in July 2025. Our final articles will share results from our pilots – including grading accuracy, estimated time savings and student/staff feedback.

As we wrap up our pilot, we invite you to:
