In 2023, the Privacy Commissioner issued comprehensive guidance for people throughout New Zealand on the use of AI. This guidance sets out several expectations for agencies wanting to use AI. For example, it suggests that you:

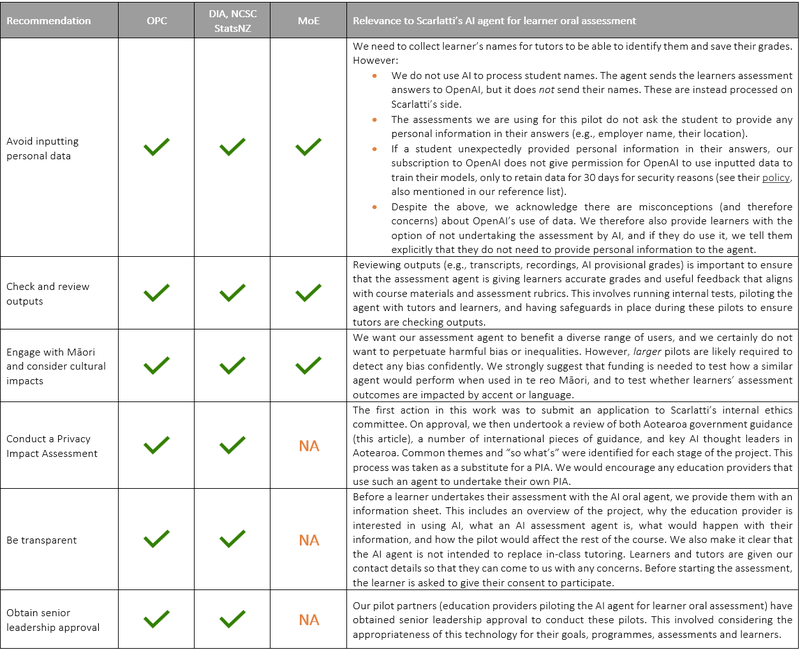

- Review whether a generative AI tool is necessary and proportionate given potential privacy impacts and consider whether you could take a different approach – Organisations should weigh up the benefits and risks of using AI before adopting this technology.

- Have senior leadership approval based on full consideration of risks and mitigations – Make sure that senior leadership is on board with rolling out AI.

- Conduct a privacy impact assessment (PIA) before using these tools – As part of the PIA, seek feedback from affected communities, including Māori, and ask the AI provider how privacy protections have been built into the tool.

- Be transparent: tell people how, when, and why the tool is being used – Being upfront and giving people information about the AI tool you are using will help to maintain trust and your organisation's social licence to use AI.

- Engage with Māori about potential risks and impacts to the taonga of their information – The Commissioner recommends being proactive in your engagement with Māori.

- Develop procedures about accuracy and access by individuals to their information – Have procedures in place to ensure that collected information is accurate and that your organisation can respond to requests from individuals wanting to access and correct their information.

- Ensure human review before acting on AI outputs to reduce risks of inaccuracy and bias – To mitigate the risk of acting on inaccurate information, a person should review the output of the AI tool and assess the risk that the information gathered could be used to re-identify individuals.

- Ensure that personal information is not retained or disclosed by the AI tool – The Commissioner strongly advises against inputting any personal information into these tools unless the provider explicitly confirms that the information will not be retained or disclosed. Alternatively, the guidance suggests stripping input data of any information that would enable individuals to be re-identified.

Note: Further information on what each of these expectations involves can be found in the Privacy Commissioner's guidance and on their website (including a useful step-by-step guide to completing a PIA).

Unlike the Privacy Commissioner's guidance above, this joint guidance was created specifically for people working in the public service (2023a). It sorts its recommendations into two groups, which we describe below.

They strongly recommend that you:

- Don't use GenAI tools for data classified at SENSITIVE or above – These datasets could be compromised, which could have a serious impact on the public service, the economy and wider society.

- Don't input personal information into GenAI tools if they are external to your environment – Doing so risks compromising personal information and losing people's trust and confidence in the government.

They also recommend that you:

- Avoid inputting personal data into GenAI tools in your network – Do not include personal information (including that of clients) when using GenAI unless all potential risks have been mitigated, and only where it is not possible to de-identify the data.

- Prevent AI from being used as shadow IT (IT that is not supported or approved by your workplace) – Shadow IT adds security and privacy risks to your workplace.

- Avoid inputting any information into GenAI tools that would be withheld under the Official Information Act (OIA) – If any information that is withheld under the OIA is accessed or used inappropriately, it could be very damaging to the public’s trust and confidence in the government.

- Avoid using GenAI for business-critical information, systems or public-facing channels – As GenAI can 'hallucinate' information and has the potential to perpetuate bias and misinformation, avoid using these tools in these contexts.

Note: A later summary of the above guidance put forward '10 dos for the trustworthy use of generative AI' in the public service (2023b). This summary appears intended to be more accessible. Its ten points are:

- Govern the use of GenAI robustly – The guidance suggests obtaining senior approval for decisions regarding AI, developing an AI policy and sharing it with the Government Chief Privacy Officer.

- Assess and manage for privacy risk – Protect privacy by undertaking a Privacy Impact Assessment before using or testing AI.

- Assess and control for security risk – Undertake security risk assessments and, where possible, opt out of having your data retained for training.

- Consider Te Tiriti o Waitangi – Engage with Māori when AI tools are being used with Māori data and when they may impact Māori.

- Use AI ethically and ensure accuracy – Be aware of the limitations of AI, how these tools can perpetuate bias and misinformation, and the importance of checking outputs for accuracy to avoid harm.

- Be accountable – Ensure that those making decisions about using and applying AI are accountable and that decision-makers have the relevant authority.

- Be transparent, including to the public – Be open about when and why AI is being used. Have processes in place to respond to requests to access and correct information.

- Exercise caution when using publicly available AI – Understand the risks when using publicly available AI such as issues around quality, security and intellectual property.

- Apply the Government’s procurement principles – Abide by the procurement rules when sourcing AI tools.

- Test safely – Allow time for teams to learn how to use and trial AI. During trials, use low-risk datasets and review outputs for accuracy before acting on them.

The Ministry of Education has guidance aimed at teachers and students wanting to use AI (2023). It outlines four important considerations:

- Make sure to check the output – As AI can 'hallucinate' (give answers that seem plausible but are inaccurate or made up), you should review and check the tool's answers.

- Consider cultural bias – AI models are trained on data from across the globe, much of which reflects dominant cultures and languages. As such, these tools may not accurately reflect Indigenous knowledge and are unlikely to have comprehensive knowledge of Mātauranga Māori, Te Reo, Pasifika languages and Polynesian cultures.

- Do not use personal data – As some AI tools use users' prompts to continue training the model, the Ministry recommends against inputting personal data into these tools.

- Look over the terms and conditions – Read the terms and conditions to check whether the AI tool will use your prompts or the data you provide to train the model.

Note: In addition, the Ministry recommends that each education provider hold internal discussions about AI and develop its own policies on using this technology. Examples of education providers' AI policies can be found on Netsafe's website.

Phoebe Gill